Building a High-Performance RAG System with PostgreSQL and DeepSeek

Introduction

- The Challenge: Need for efficient semantic search systems

- The Solution: RAG (Retrieval-Augmented Generation) with PostgreSQL

- Why PostgreSQL + pgvectorscale is an excellent alternative to dedicated vector databases

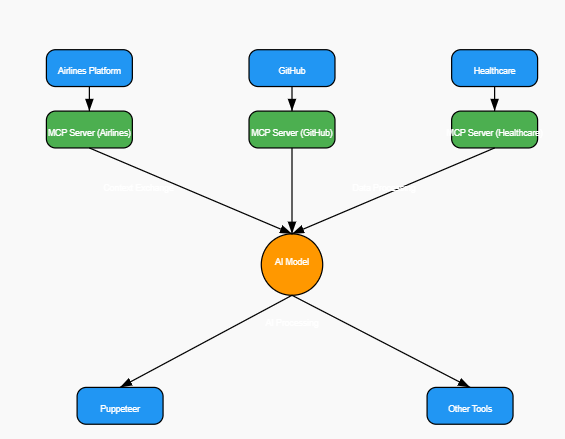

Technical Architecture

1. Technology Stack

- PostgreSQL with pgvectorscale

- deepseek-Coder via Ollama

- Model deepseek-r1:14b

- Python implementation

- Docker containerization

2. Core Components

- Vector storage system

- Similarity search system

- Response synthesis system

- Database interface

Step-by-Step Implementation

1. Environment Setup

services:

timescaledb:

image: timescale/timescaledb-ha:pg16

container_name: timescaledb

environment:

- POSTGRES_DB=postgres

- POSTGRES_PASSWORD=password

ports:

- "5432:5432"

volumes:

- timescaledb_data:/var/lib/postgresql/data

restart: unless-stopped

volumes:

timescaledb_data:2. Vector Storage

- Database structure

- Document insertion process

- Embedding generation with DeepSeek-Coder

- Indexing for optimal performance

Generating and Managing Embeddings with DeepSeek and Timescale Vector

Understanding the Role of Embeddings in AI Systems

Embeddings are a fundamental component of AI-driven applications, particularly in natural language processing (NLP)and retrieval-augmented generation (RAG). They allow us to transform unstructured text into dense vector representations, capturing semantic meaning in a way that machines can process efficiently.

In this project, we leverage DeepSeek Coder (via Ollama) to generate embeddings and Timescale Vector (a PostgreSQL extension) to store and retrieve them efficiently. This combination enables high-performance vector search and semantic retrieval.

Why Use DeepSeek Coder for Embeddings?

Most AI applications rely on cloud-based APIs (e.g., OpenAI or Cohere) for generating embeddings, but this introduces privacy concerns, latency issues, and costs.

By using DeepSeek Coder locally with Ollama, we achieve: ✅ Full data privacy (no external API calls)

How to Install and Run DeepSeek Coder Locally with Ollama

Step 1: Install Ollama

Ollama is required to run DeepSeek Coder locally.

For macOS & Linux:

Run the following command in your terminal:

curl -fsSL https://ollama.com/install.sh | shStep 2: Pull the DeepSeek Coder Model

Once Ollama is installed, download DeepSeek Coder by running:

ollama pull deepseek-coderStep 4: Use DeepSeek Coder for Embeddings

If you want to generate embeddings locally, use the ollama.embeddings API:

Python Code to Generate Embeddings:

import ollama

response = ollama.embeddings(

model="deepseek-coder",

prompt="What is Retrieval-Augmented Generation?"

)

print(response["embedding"])Output:

A list of floating-point numbers representing the vectorized embedding of the input text.

class VectorStore:

"""A class for managing vector operations and database interactions."""

def __init__(self):

"""Initialize the VectorStore with settings, OpenAI client, and Timescale Vector client."""

self.settings = get_settings()

self.embedding_model = "deepseek-coder" # Modèle local Ollama

self.vector_settings = self.settings.vector_store

self.vec_client = client.Sync(

self.settings.database.service_url,

self.vector_settings.table_name,

self.vector_settings.embedding_dimensions,

time_partition_interval=self.vector_settings.time_partition_interval,

)

def get_embedding(self, text: str) -> List[float]:

"""

Generate embedding for the given text using Ollama (DeepSeek R1:14).

Args:

text: The input text to generate an embedding for.

Returns:

A list of floats representing the embedding.

"""

text = text.replace("\n", " ")

start_time = time.time()

try:

response = ollama.embeddings(

model=self.embedding_model,

prompt=text

)

embedding = response['embedding']

embedding = embedding[:1999]

print(f"Generated embedding size: {len(embedding)}") # Vérification

except requests.exceptions.RequestException as e:

logging.error(f"Failed to get embedding: {e}")

return []

elapsed_time = time.time() - start_time

logging.info(f"Embedding generated in {elapsed_time:.3f} seconds")

return embeddingPreparing Data for Vector Storage

The dataset, stored in faq_dataset.csv, contains frequently asked questions (FAQs) along with their corresponding answers and categories. Before storing this data in Timescale Vector, we need to:

- Convert questions and answers into embeddings using DeepSeek Coder.

- Assign each record a unique identifier (

UUID v1), capturing the time of insertion. - Store metadata, such as the category and timestamp.

The prepare_record function takes care of this process:

from datetime import datetime

import pandas as pd

from database.vector_store import VectorStore

from timescale_vector.client import uuid_from_time

# Initialize VectorStore

vec = VectorStore()

# Read the CSV file

df = pd.read_csv("../data/faq_dataset.csv", sep=";")

# Prepare data for insertion

def prepare_record(row):

"""Prepare a record for insertion into the vector store.

This function creates a record with a UUID version 1 as the ID, which captures

the current time or a specified time.

Note:

- By default, this function uses the current time for the UUID.

- To use a specific time:

1. Import the datetime module.

2. Create a datetime object for your desired time.

3. Use uuid_from_time(your_datetime) instead of uuid_from_time(datetime.now()).

Example:

from datetime import datetime

specific_time = datetime(2023, 1, 1, 12, 0, 0)

id = str(uuid_from_time(specific_time))

This is useful when your content already has an associated datetime.

"""

content = f"Question: {row['question']}\nAnswer: {row['answer']}"

embedding = vec.get_embedding(content)

return pd.Series(

{

"id": str(uuid_from_time(datetime.now())), # Generates time-based UUID

"metadata": {

"category": row["category"],

"created_at": datetime.now().isoformat(), # Track insertion time

},

"contents": content,

"embedding": embedding,

}

)

records_df = df.apply(prepare_record, axis=1)Creating Tables and Indexing Embeddings

Once the FAQ records are vectorized and assigned a UUID, they are stored in Timescale Vector. The following steps ensure that the data is:

- Persisted in a structured table.

- Indexed for fast similarity search using Hierarchical Navigable Small World (HNSW) indexing.

# Create tables and insert data

vec.create_tables()

vec.create_index() # HNSWIndex

vec.upsert(records_df)Managing AI Model Interactions with LLMFactory and DeepSeek

The LLMFactory class is a centralized interface for interacting with DeepSeek-R1 , ensuring structured responses, error handling, and flexibility. It abstracts the complexity of sending prompts, processing responses, and handling errors while supporting structured output through Pydantic models.

With configurable parameters like temperature and max_tokens, developers can fine-tune model behavior for RAG pipelines, chatbots, or AI-powered assistants.

How to Install and Run DeepSeek-R1:14B Locally with Ollama

DeepSeek-R1:14B is a powerful large language model (LLM) optimized for code generation, reasoning, and AI applications. Running it locally with Ollama ensures privacy, cost efficiency.

ollama run deepseek-r1:14bclass LLMFactory:

"""Factory class to initialize different LLM providers dynamically (only supports DeepSeek via Ollama)."""

def __init__(self):

self.settings = get_settings().deepseek

if not self.settings:

raise ValueError("❌ DeepSeek configuration is missing in settings.")

self.client = self._initialize_client()

def _initialize_client(self) -> Any:

"""Initialize the client for DeepSeek via Ollama."""

return {"model": self.settings.default_model} # Ollama ne nécessite pas d'API Key

def create_completion(

self, response_model: Optional[Type[BaseModel]], messages: List[Dict[str, str]], **kwargs

) -> Any:

"""Generate a completion response using DeepSeek via Ollama.

Args:

response_model: A Pydantic model to parse the response (optional).

messages: List of chat messages.

Returns:

Parsed response if response_model is provided, otherwise raw text.

"""

response = ollama.chat(

model="deepseek-r1:14b",

messages=messages,

options={

"temperature": kwargs.get("temperature", 0.7),

"num_ctx": kwargs.get("max_tokens", 4096),

},

)

# Extraire la réponse texte brute

if "message" in response:

raw_text = response["message"]["content"]

else:

logging.error(f"❌ Error from Ollama: {response}")

return None

logging.info(f"📝 Ollama Response: {raw_text}")

# 🛠️ Tentative de parsing en JSON si un response_model est fourni

if response_model:

try:

json_response = json.loads(raw_text) # Convertit la réponse en JSON

parsed_response = response_model.parse_obj(json_response) # Vérifie la structure

return parsed_response

except (json.JSONDecodeError, ValidationError) as e:

logging.error(f"❌ Error parsing response: {e}")

return None

return raw_text # Retourne du texte brut si pas de `response_model`Enhancing Response Parsing in AI-Powered FAQ Systems

The Synthesizer class is designed to generate structured responses from an AI assistant using DeepSeek via Ollama. A key challenge in AI-generated text is that responses often contain unstructured output, extra text, or formatting issues, making it difficult to parse them cleanly.

To solve this, we implement a robust JSON extraction mechanism that ensures the AI returns valid and structured responses, which can be directly used in an application.

Defining a Clear AI Behavior with a Structured System Prompt

The SYSTEM_PROMPT plays a crucial role in ensuring that the AI assistant delivers precise, structured, and contextually relevant responses in an e-commerce FAQ system. This carefully designed instruction set guides the AI's behavior and enforces a consistent response format, making it easier to parse and integrate into applications.

SYSTEM_PROMPT = """

# Role and Purpose

You are an AI assistant for an e-commerce FAQ system. Your task is to synthesize a coherent and helpful answer

based on the given question and relevant context retrieved from a knowledge database.

# Response Format:

You MUST return the response in a valid JSON format following this structure:

{

"thought_process": ["step 1", "step 2", "step 3"],

"answer": "Your final answer here",

"enough_context": true # or false

}

# Guidelines:

1. Provide a clear and concise answer to the question.

2. Use only the information from the relevant context to support your answer.

3. The context is retrieved based on cosine similarity, so some information might be missing or irrelevant.

4. Be transparent when there is insufficient information to fully answer the question.

5. Do not make up or infer information not present in the provided context.

6. If you cannot answer the question based on the given context, clearly state that.

7. Maintain a helpful and professional tone appropriate for customer service.

8. Adhere strictly to company guidelines and policies by using only the provided knowledge base.

"""Defining the AI’s Role and Purpose

The first section of the prompt explicitly assigns a role to the AI:

“You are an AI assistant for an e-commerce FAQ system. Your task is to synthesize a coherent and helpful answer based on the given question and relevant context retrieved from a knowledge database.”

This instruction ensures that the AI:

- 🛒 Focuses on e-commerce and doesn’t generate unrelated responses.

- 🔎 Uses only retrieved knowledge base context instead of hallucinating information.

- 🗂 Synthesizes a response coherently for better readability.

Enforcing a JSON-Based Response Format

Enforcing a JSON-Based Response Format

One of the biggest challenges with AI models is inconsistent output formatting. To ensure structured and machine-readable responses, the prompt enforces a strict JSON output format:

{

"thought_process": ["step 1", "step 2", "step 3"],

"answer": "Your final answer here",

"enough_context": true

}Generating Responses with AI and Cleaning JSON Output

The generate_response() method ensures that:

- The AI receives a strict system prompt defining the JSON structure.

- The response is cleaned with

extract_json()before parsing. - Errors are logged and handled gracefully if the response is invalid.

@staticmethod

def generate_response(question: str, context: pd.DataFrame) -> SynthesizedResponse:

"""Generates a structured response from the AI model.

Args:

question: The user's query.

context: Relevant knowledge base context.

Returns:

A SynthesizedResponse object with structured data.

"""

context_str = Synthesizer.dataframe_to_json(

context, columns_to_keep=["content", "category"]

)

messages = [

{"role": "system", "content": Synthesizer.SYSTEM_PROMPT},

{"role": "user", "content": f"# User question:\n{question}"},

{

"role": "assistant",

"content": f"# Retrieved information:\n{context_str}",

},

]

llm = LLMFactory()

raw_response = llm.create_completion(response_model=None, messages=messages)

try:

cleaned_response = extract_json(raw_response) # Extract valid JSON

json_response = json.loads(cleaned_response) # Convert to JSON

return SynthesizedResponse.parse_obj(json_response) # Validate with Pydantic

except (json.JSONDecodeError, ValidationError) as e:

logging.error(f"❌ Error parsing response: {e}")

return SynthesizedResponse(

thought_process=["Error processing response"],

answer="I could not process the AI's response.",

enough_context=False

)Enhancing AI-Powered Search with Vector Embeddings and Advanced Filtering

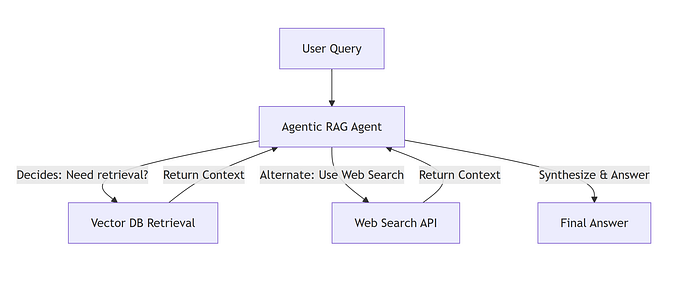

he integration of vector search with an AI-powered synthesizer enables a highly efficient and intelligent retrieval-augmented generation (RAG) system. This approach combines semantic search, metadata filtering, and time-based queries to provide precise, context-aware responses from an AI assistant.

Understanding the Retrieval and Response Process

The code first initializes a VectorStore, which stores DeepSeek-generated embeddings. When a user asks a question, the system:

- Searches for relevant context in the vector database (

vec.search()). - Synthesizes an AI-powered response using the retrieved information (

Synthesizer.generate_response()). - Prints a structured answer along with the AI’s reasoning process.

Example: Retrieving Context for a Shipping Question

relevant_question = "What are your shipping options?"

results = vec.search(relevant_question, limit=3)

response = Synthesizer.generate_response(question=relevant_question, context=results)

print(f"\n{response.answer}")

print("\nThought process:")

for thought in response.thought_process:

print(f"- {thought}")

print(f"\nContext: {response.enough_context}")Output:

env_app) MacBook-Pro:app $ python similarity_search.py

2025-03-09 15:24:29,072 - INFO - HTTP Request: POST http://127.0.0.1:11434/api/embeddings "HTTP/1.1 200 OK"

Generated embedding size: 1999

2025-03-09 15:24:29,075 - INFO - Embedding generated in 0.154 seconds

2025-03-09 15:24:29,187 - INFO - Vector search completed in 0.113 seconds

2025-03-09 15:24:39,039 - INFO - HTTP Request: POST http://127.0.0.1:11434/api/chat "HTTP/1.1 200 OK"

We offer standard (3-5 business days) and express (1-2 business days) shipping options.

Thought process:

- Identify relevant context about shipping options

- Extract specific shipping durations from the context

- Formulate a clear and concise answer

Context: True

(env_app) MacBook-Pro:app $What’s happening here?

- The system sends a request to DeepSeek Coder via Ollama to convert the input question into a dense vector representation.

- The AI assistant analyzes the retrieved context and generates a structured response.

- The response is concise and informative, reflecting the retrieved shipping information.

- Thought Process

- Validating Context Relevance

Why This is a Powerful AI Search System

✔ Retrieves information based on meaning, not just keywords

✔ Prevents hallucinations by relying on real data

✔ Explains its reasoning step by step

✔ Validates whether enough information was available

✔ Optimized for real-time responses with low latency

This hybrid AI system (Vector Search + DeepSeek Coder) makes it ideal for chatbots, knowledge bases, and RAG applications. 🚀

Explore the Full Project on GitHub

This AI-powered RAG (Retrieval-Augmented Generation) system integrates DeepSeek Coder, vector search with Timescale Vector, and advanced filtering techniques to deliver accurate, context-aware responses.

💡 Check out the full implementation and source code on GitHub:

👉 GitHub Repository: deepseek-rag-psql

🔹 Learn how to build a scalable AI-powered search system

🔹 Explore vector embeddings, filtering, and metadata-based search

🔹 See how to use DeepSeek Coder for intelligent response synthesis

🚀 Start building your own AI-powered knowledge assistant today!