Building Serverless in Native Kubernetes

What this is

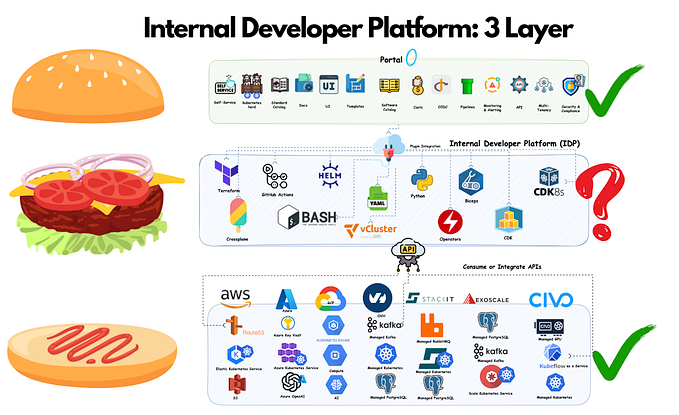

A step-by-step tutorial on one of the easiest and most straightforward ways to set up a simple Kubernetes-native serverless platform that lets you deploy small bits of code without worrying about the underlying infrastructure plumbing. It leverages Kubernetes resources to provide auto-scaling, API routing, monitoring, and troubleshooting, and it supports multiple programming languages (Node.js, Go, Python, ...).

To start, I advise you to install Docker Desktop. If you are new to Docker or Kubernetes, these resources are good introductions:

- Docker intro from the official docs (always a good place to start): https://docs.docker.com/get-started/

- Kubernetes intro from the official docs: https://kubernetes.io/docs/tutorials/kubernetes-basics/

- Another good one about Docker: https://docker-curriculum.com/

- A nice online course about Kubernetes from edX: Introduction to Kubernetes

Check our installation

Let us try out a few things to make sure we understand what has been installed. Run the following command in a terminal:

$ kubectl version
Client Version: version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.10-dispatcher", GitCommit:"f5757a1dee5a89cc5e29cd7159076648bf21a02b", GitTreeState:"clean", BuildDate:"2020-02-06T03:31:35Z", GoVersion:"go1.12.12b4", Compiler:"gc", Platform:"darwin/amd64"}
Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.7", GitCommit:"1dd5338295409edcfff11505e7bb246f0d325d15", GitTreeState:"clean", BuildDate:"2021-01-13T13:15:20Z", GoVersion:"go1.15.5", Compiler:"gc", Platform:"linux/amd64"}

Install Helm

Install Microfunctions

# Helm

$ helm repo add microfunctions https://microfunctionsio.github.io/microfunctions-helm
$ helm install microfunctions microfunctions/microfunctions -n microfunctions --create-namespace --values=https://raw.githubusercontent.com/microfunctionsio/microfunctions/main/Install/values-local-ingress.yaml
NAME: microfunctions
LAST DEPLOYED: Sat Apr 3 20:26:17 2021
NAMESPACE: microfunctions
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
https://microfunctions.local
1. Get the microfunctions-controller URL by running these commands:
https://microfunctions.local/microfunctions/apis
3. Login with the following credentials below to see your microfunctions platform :
echo Username: owner@microfunctions.local
echo Password: $(kubectl get secret --namespace microfunctions microfunctions-init -o jsonpath="{.data.owner-password}" | base64 --decode)

To map the host name microfunctions.local to the IP address 127.0.0.1, add the last line shown below to the /etc/hosts file:

##

# Host Database

#

# localhost is used to configure the loopback interface

# when the system is booting. Do not change this entry.

##

127.0.0.1 localhost

127.0.0.1 microfunctions.local

Access to the Platform
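Before opening the platform, it can help to confirm that the hosts entry is really in place. The following is a minimal sketch, not part of MicroFunctions: the helper name `has_host_entry` and the sample text are hypothetical, and it only checks the text it is given rather than performing a real DNS lookup.

```python
def has_host_entry(hosts_text: str, hostname: str, ip: str) -> bool:
    """Return True if hosts_text contains a line mapping hostname to ip."""
    for line in hosts_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        entry = line.split("#", 1)[0].strip()
        if not entry:
            continue
        # A hosts line is an address followed by one or more names.
        address, *names = entry.split()
        if address == ip and hostname in names:
            return True
    return False


# Sample content mirroring the hosts file shown in this tutorial.
sample = """\
##
# Host Database
##
127.0.0.1 localhost
127.0.0.1 microfunctions.local
"""

print(has_host_entry(sample, "microfunctions.local", "127.0.0.1"))
```

To check the real file instead of the sample, pass the result of `open("/etc/hosts").read()` as the first argument.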

Go to https://microfunctions.local and log in as owner@microfunctions.local.

To get the password, run the following command:

echo Password: $(kubectl get secret --namespace microfunctions microfunctions-init -o jsonpath="{.data.owner-password}" | base64 --decode)

Adding a Kubernetes cluster

Install dependencies on the cluster

MicroFunctions is a combination of open source tools.

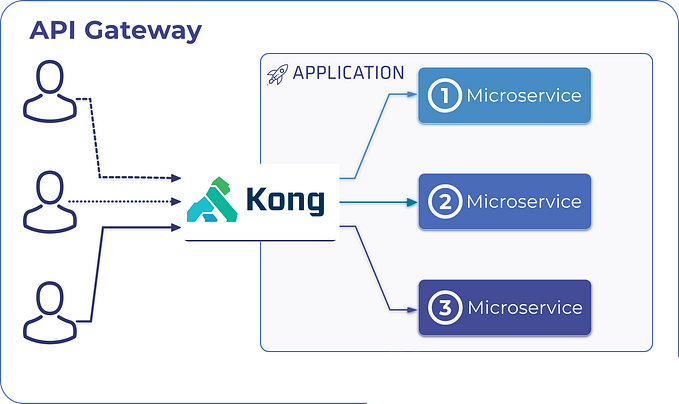

- Kong Ingress Controller: implements authentication, transformations, and other functionality across Kubernetes clusters

- cert-manager: a controller that issues certificates from a variety of sources

- Kubeless: a Kubernetes-native serverless framework

Add Namespace

Add functions

Managing Resources for Containers

When you specify a Pod, you can optionally specify how much of each resource a Container needs. The most common resources to specify are CPU and memory (RAM); there are others.

When you specify the resource request for Containers in a Pod, the scheduler uses this information to decide which node to place the Pod on. When you specify a resource limit for a Container, the kubelet enforces those limits so that the running container is not allowed to use more of that resource than the limit you set. The kubelet also reserves at least the request amount of that system resource specifically for that container to use.
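As a rough illustration of how requests and limits relate, the sketch below parses Kubernetes-style CPU and memory quantities and checks that each request does not exceed its limit, which the Kubernetes API also requires. The helper names and the values in `function_resources` are hypothetical, and only the most common quantity suffixes are handled.

```python
from decimal import Decimal

# Common binary and decimal suffixes used in Kubernetes memory quantities.
_MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU quantity such as "250m" or "0.5" to a number of cores."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000  # millicores
    return Decimal(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as "128Mi" to a number of bytes."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))  # plain bytes


def check_resources(resources: dict) -> None:
    """Raise ValueError if a request is larger than the matching limit."""
    requests, limits = resources["requests"], resources["limits"]
    if parse_cpu(requests["cpu"]) > parse_cpu(limits["cpu"]):
        raise ValueError("CPU request exceeds CPU limit")
    if parse_memory(requests["memory"]) > parse_memory(limits["memory"]):
        raise ValueError("memory request exceeds memory limit")


# Hypothetical values for a small function, in the shape of a container
# "resources" section.
function_resources = {
    "requests": {"cpu": "250m", "memory": "64Mi"},
    "limits": {"cpu": "500m", "memory": "128Mi"},
}

check_resources(function_resources)
print(parse_cpu("250m"), parse_memory("128Mi"))
```

With these values the scheduler would look for a node with at least a quarter of a core and 64 MiB free, while the kubelet would cap the container at half a core and 128 MiB.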

Choose your preferred language

The MicroFunctions platform supports multiple programming languages (Node.js, Go, Python, ...).
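For Python, a function can be as small as the sketch below. It assumes the platform follows the Kubeless Python convention of a handler that receives `(event, context)` and reads the request payload from `event["data"]`; the function name `hello` and the `name` field are hypothetical.

```python
def hello(event, context):
    """Kubeless-style handler: build a greeting from the request payload."""
    data = event.get("data") or {}
    # The payload may be a plain string rather than parsed JSON.
    name = data.get("name", "world") if isinstance(data, dict) else "world"
    return f"Hello, {name}!"


# Local smoke test with a hand-built event; on the platform the runtime
# supplies both arguments.
fake_event = {"data": {"name": "Kubernetes"}}
print(hello(fake_event, context={}))
```

Keeping the handler free of platform-specific imports makes it easy to test locally before deploying it.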

Enable autoscaler

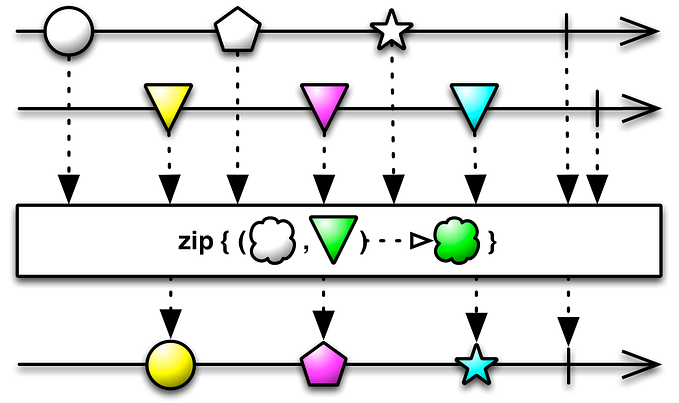

The Horizontal Pod Autoscaler automatically scales the number of Pods in a replication controller, deployment, replica set or stateful set based on observed CPU utilization (or, with custom metrics support, on some other application-provided metrics). Note that Horizontal Pod Autoscaling does not apply to objects that can’t be scaled, for example, DaemonSets.

The Horizontal Pod Autoscaler is implemented as a Kubernetes API resource and a controller. The resource determines the behavior of the controller. The controller periodically adjusts the number of replicas in a replication controller or deployment to match the observed metrics such as average CPU utilisation, average memory utilisation or any other custom metric to the target specified by the user.
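The adjustment the controller makes can be sketched with the replica formula from the Kubernetes documentation: the desired count is the current count scaled by the ratio of the observed metric to the target, rounded up. The function below is a simplified model, not the controller's actual code; the parameter names and the replica bounds are hypothetical, and the 0.1 tolerance matches the documented Kubernetes default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 50% target.
print(desired_replicas(4, 90, 50))
```

In this example the ratio is 1.8, so the sketch asks for 8 replicas; a reading of 52% against the same target falls inside the tolerance and leaves the count at 4.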

Deploy functions